FPGA designers face increasing time-to-market pressure as FPGA complexity and performance both grow. This often moves the engineer away from hand-crafting each element of the design and toward using IP cores provided by device vendors and other third-party suppliers. Using off-the-shelf IP frees the engineer to focus on developing the custom blocks that are critical to the application and where their specialized, value-added knowledge is applied.

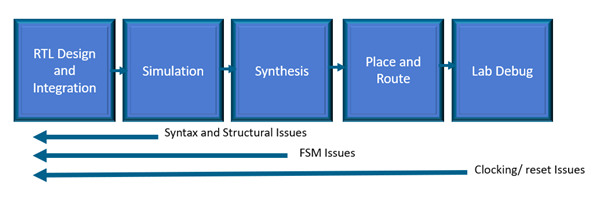

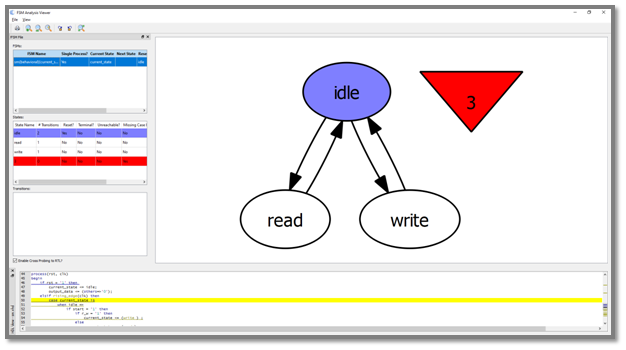

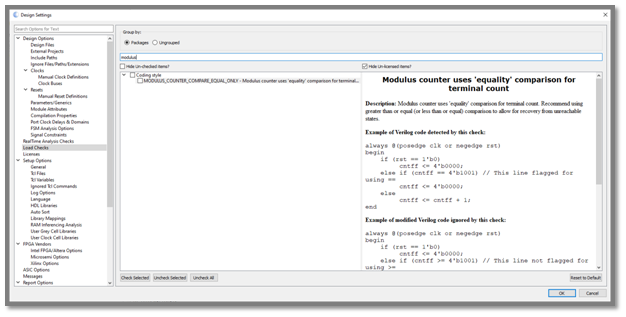

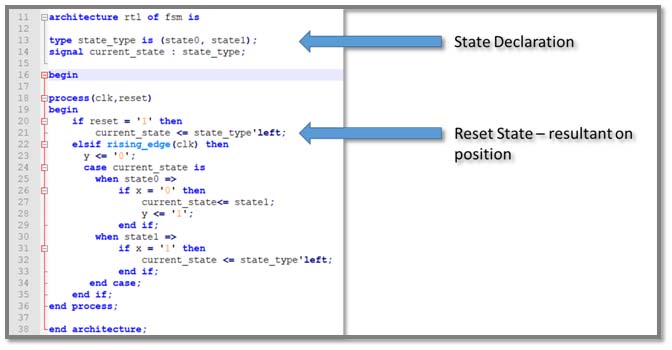

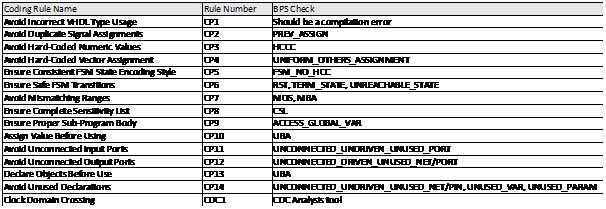

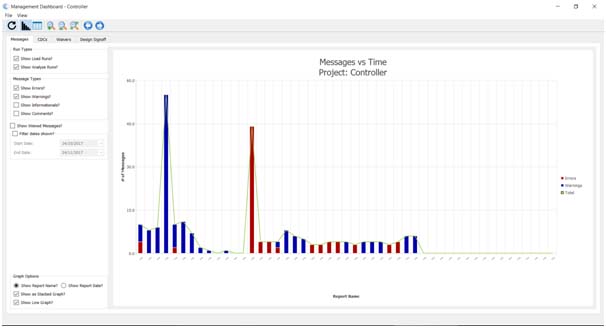

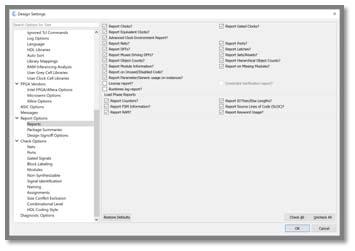

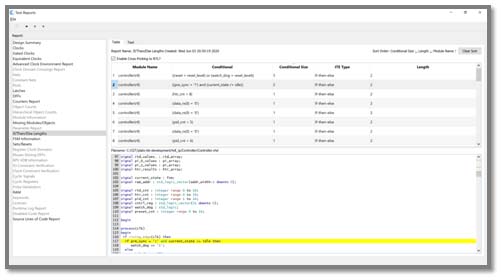

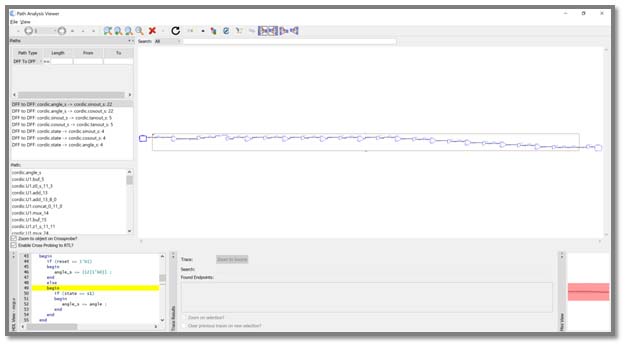

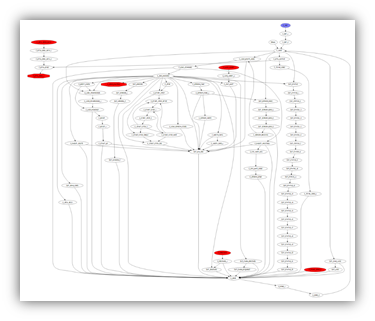

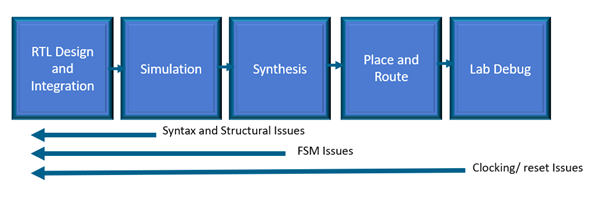

Blue Pearl’s Visual Verification™ Suite helps developers address these time-to-market and complexity challenges. The suite enables the engineer to achieve tighter development time scales by identifying issues in custom-developed RTL, such as FSM errors, bad logic, or design elements that may impact performance in simulation or implementation. With the suite, issues are found early in the design cycle, as you code, rather than late in the design or, worse, in the lab.

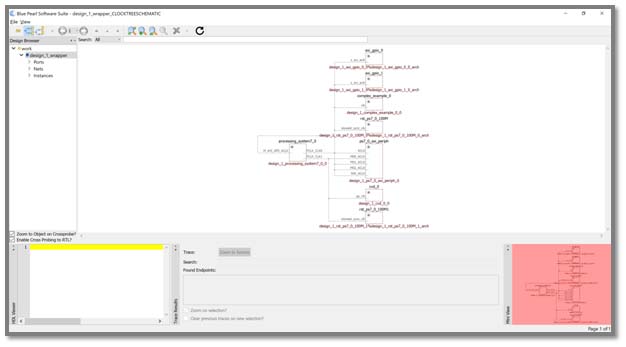

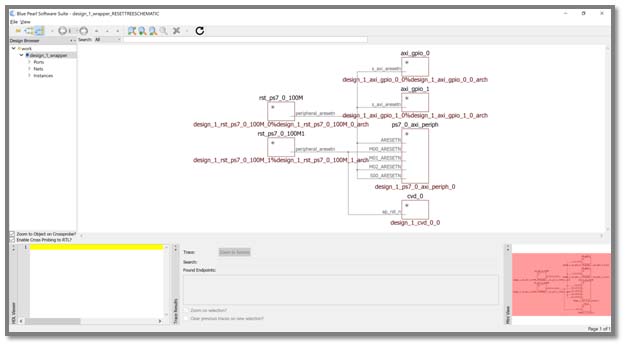

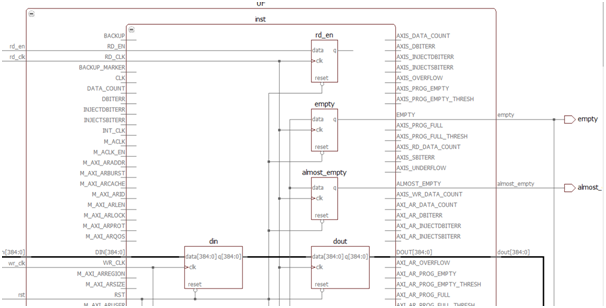

In addition, the Visual Verification Suite can analyze the complete design to ensure there are no accidental Clock Domain Crossings (CDCs) introduced between custom IP blocks and vendor or third-party IP.

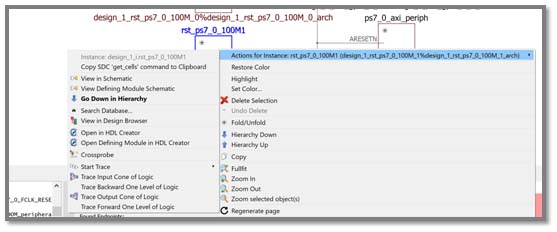

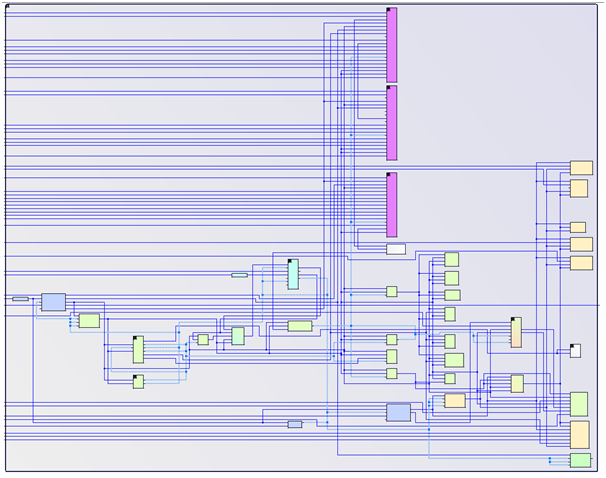

Working with vendor and third-party IP can be a challenge, especially when interfacing with tools like Intel® Quartus® Prime and its Platform Designer (formerly Qsys). The challenge arises because the architectural diagram is created visually and pulls together several RTL files, typically encrypted, to describe the IP modules and the overall architecture. If this is not handled correctly, the engineer can be swamped by messages when using static analysis tools like the Visual Verification Suite. These messages and warnings result from coding structures inside the encrypted vendor and third-party IP blocks, so the user can do nothing about them.

Here Blue Pearl’s patented Grey Cell™ technology saves significant time. As the project loads, the Visual Verification Suite recognizes the vendor’s IP libraries and loads a Grey Cell model in place of each IP core. This model contains only the first rank of input flip-flops and the last rank of output flip-flops. With this boundary information, the suite can perform a clock domain crossing analysis at the top level of the design without looking in depth into the vendor or third-party IP. It also reduces the messages and warnings to only those of interest to the developer. (Learn more about Grey Cell technology at www.bluepearlsoftware.com/files/GreyCell_WP.pdf)
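To make the idea concrete, here is a minimal conceptual sketch in Python of why boundary registers alone are enough for a top-level crossing check. It is not Blue Pearl's implementation: the `Flop`, `GreyCell`, and `find_cdc` names, the signal names, and the clock names are all hypothetical, and a real CDC analysis would also check for synchronizers rather than only comparing clocks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flop:
    """A register at a block boundary, tagged with the clock that drives it."""
    name: str
    clock: str


@dataclass(frozen=True)
class GreyCell:
    """Hypothetical stand-in for an IP core: boundary registers only."""
    name: str
    input_flops: tuple   # first rank of input flip-flops
    output_flops: tuple  # last rank of output flip-flops


def find_cdc(connections):
    """Return (source, destination) flop pairs whose clocks differ."""
    return [(src, dst) for src, dst in connections if src.clock != dst.clock]


# Custom RTL registers (names and clocks are illustrative assumptions).
tx_reg = Flop("custom/tx_data_reg", "clk_sys")
rx_reg = Flop("custom/rx_data_reg", "clk_sys")

# Vendor IP modeled only by its boundary ranks; its internals are not needed.
fifo = GreyCell(
    name="vendor_fifo",
    input_flops=(Flop("vendor_fifo/wr_data_reg", "clk_sys"),),
    output_flops=(Flop("vendor_fifo/rd_data_reg", "clk_pix"),),
)

# Top-level connections: driving flop -> receiving flop.
connections = [
    (tx_reg, fifo.input_flops[0]),   # clk_sys -> clk_sys: same domain
    (fifo.output_flops[0], rx_reg),  # clk_pix -> clk_sys: crossing
]

crossings = find_cdc(connections)
for src, dst in crossings:
    print(f"CDC: {src.name} ({src.clock}) -> {dst.name} ({dst.clock})")
```

In this toy model only the path leaving the IP core is flagged, because its last-rank output register is clocked differently from the custom register that receives it. Nothing inside the encrypted core had to be examined to find that.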

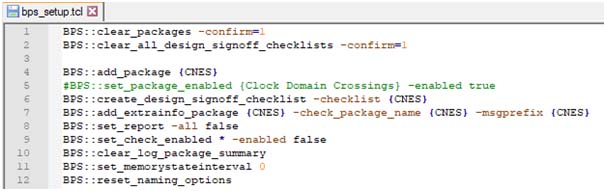

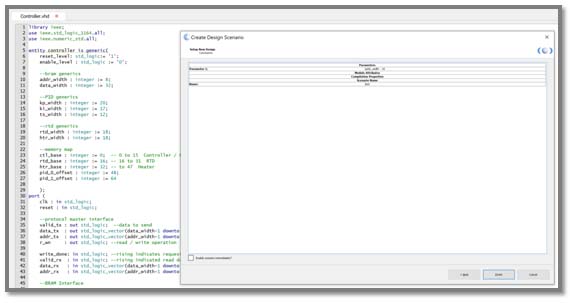

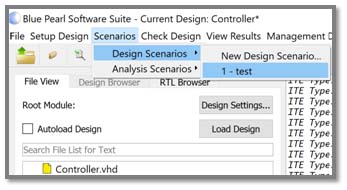

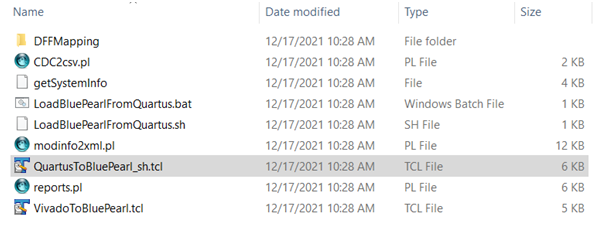

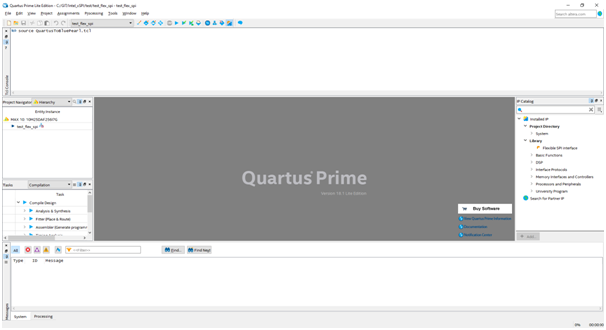

To pull in the top-level design from Intel’s Platform Designer, Blue Pearl provides Tcl scripts in the installation directory. Run from the Quartus Tcl window, they extract the project and create a Tcl script that the Visual Verification Suite can open.

Creating a Blue Pearl project Tcl script is as simple as copying the QuartusToBluePearl_sh.tcl script to the Quartus project area and then sourcing it in the Tcl console.

This creates a LoadBluePearlFromQuartus.tcl script, which can then be opened by the Visual Verification Suite and which contains all the elements and files required to get started with analysis.

Alternatively, Quartus users can run the LoadBluePearlFromQuartus.sh (Linux) or LoadBluePearlFromQuartus.bat (Windows) script, which starts Quartus in non-graphical mode and sources the same script.

A similar flow is available for Xilinx® Vivado® users. Copy the VivadoToBluePearl.tcl script into the project directory and source it from the Vivado Tcl console to produce a LoadBluePearlFromVivado.tcl Tcl script.

Used early and often in the design process with Quartus Prime or Vivado, rather than only as an end-of-design sign-off tool, Blue Pearl’s Visual Verification Suite contributes significantly to design efficiency and quality while minimizing the chances of field vulnerabilities and failures.

To learn more about the Visual Verification Suite, please request a demonstration at https://bluepearlsoftware.com/request-demo/

Moore’s law foresaw the ability to pack twice as many transistors onto the same sliver of silicon every 18 months. Fast forward roughly 55 years, and some experts now think Moore’s law is coming to an end. Others argue that the law continues through a blend of new innovations that leverage systemic complexity, such as 2.5D and 3D integration techniques.

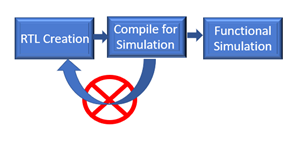

In addition, with static verification, the 63% of trivial human errors or typos that are typically not found until simulation (source: DVCon U.S. 2018) can instead be identified early, as the design is being created. By “shifting verification left”, design teams save time and deliver on a much more predictable schedule.